Tricentis today revealed it has acquired SeaLights, a provider of a software-as-a-service (SaaS) platform that uses machine learning algorithms to keep track of changes to code.

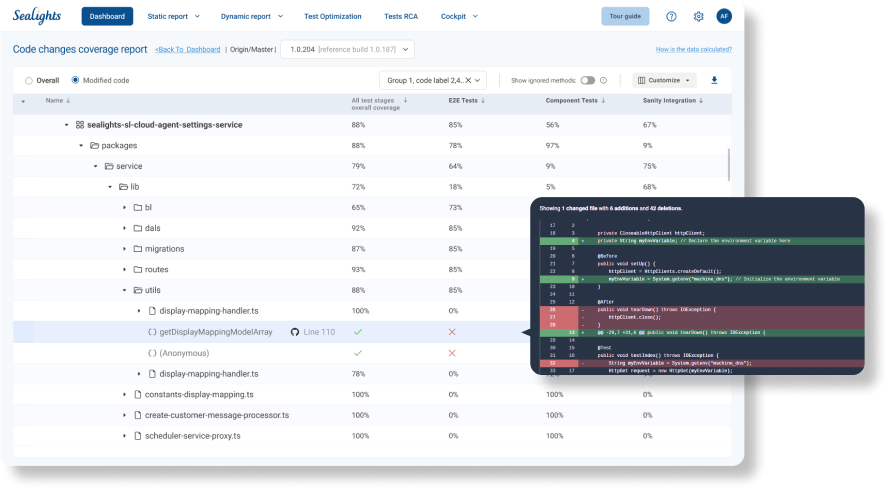

SeaLights requires agent software to be installed on developers’ systems, where it generates metrics, traceability and other insights into code changes. The agent then recommends which types of tests should be run based on the changes made to the code base. That capability makes it simpler to identify and close any gaps in the existing suite of tests being run.
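The general idea of recommending tests based on code changes can be illustrated with a minimal sketch. This is not SeaLights' actual algorithm, which the announcement does not detail. It assumes a hypothetical coverage map that records which source files each test exercises. The function name `select_tests`, the test names and the file names are all invented for illustration.

```python
from typing import Dict, List, Set, Tuple


def select_tests(
    coverage_map: Dict[str, Set[str]],
    changed_files: Set[str],
) -> Tuple[List[str], List[str]]:
    """Return (tests to run, changed files that no test covers).

    coverage_map is a hypothetical mapping of test name -> files it exercises.
    """
    # A test is relevant if it touches at least one changed file.
    selected = sorted(
        test for test, files in coverage_map.items() if files & changed_files
    )
    # Any changed file absent from every test's coverage is a gap in the suite.
    covered = set().union(*coverage_map.values())
    gaps = sorted(changed_files - covered)
    return selected, gaps


# Hypothetical example data, not taken from any real project.
coverage_map = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"auth.py", "profile.py"},
}
changed = {"auth.py", "shipping.py"}

selected, gaps = select_tests(coverage_map, changed)
print(selected)  # ['test_login', 'test_profile']
print(gaps)      # ['shipping.py']
```

In this toy case, only two of the three tests need to run, and the change to `shipping.py` is flagged as untested. A production tool would presumably build the coverage map from runtime instrumentation rather than a hand-written dictionary.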

Mav Turner, chief product and strategy officer for Tricentis, said SeaLights will make it simpler to surface the most appropriate tests based on the maps of code changes that those algorithms generate.

Next, Tricentis plans to more tightly integrate the SeaLights agent software with its test automation framework, said Turner.

Too often today, the same tests are run regardless of what type of code is being created. Additionally, Tricentis anticipates being able to narrow the range of functional tests that need to be run to match the changes made to the underlying code, noted Turner. Many IT teams run the full battery of tests every time a change is made, no matter how small that change might be, he added.

The need for the capabilities provided by SeaLights will become more crucial as developers take advantage of artificial intelligence (AI) tools to write code faster. The volume of code that needs to be continuously tested is only going to increase, said Turner.

Tricentis now has more than 3,000 customers in 19 countries and has been aggressively embedding AI capabilities across its testing portfolio using copilots that have been developed in collaboration with OpenAI. The overall goal is to make it simpler to test code as early as possible across the entire software development lifecycle without impeding the pace at which applications are being built and deployed, said Turner.

In theory, generative AI coupled with machine learning algorithms should also make it easier for DevOps teams to both reuse tests and, through summarization capabilities, better understand how they are being run. Collectively, those capabilities will make it possible to run tests faster, with fewer errors, while reducing costs and increasing productivity. The more tests run before an application is deployed, the less troubleshooting there should be once that application is running in a production environment.

It’s not yet clear to what degree generative AI will democratize application testing. However, as tests become easier to create, there should be more time to run more complex tests that might, for example, address cybersecurity issues or issues that only impact a small number of end users who have a unique use case for an application.

Most of the tests that are run today typically surface common programming mistakes that, with help from AI, will one day be automatically resolved as soon as they are discovered.